Why do things fall apart? Why do living systems grow more complex? Can we live in a world that is learning to balance both truths?

At the heart of these questions lies a conceptual triad: entropy, ectropy, and negentropy. Born in the heat engines of 19th-century physics and later extended into the deep questions of life, information, and culture, these three ideas help us understand how systems decline and how they thrive.

1. Entropy: A Measure of Disorder and Direction

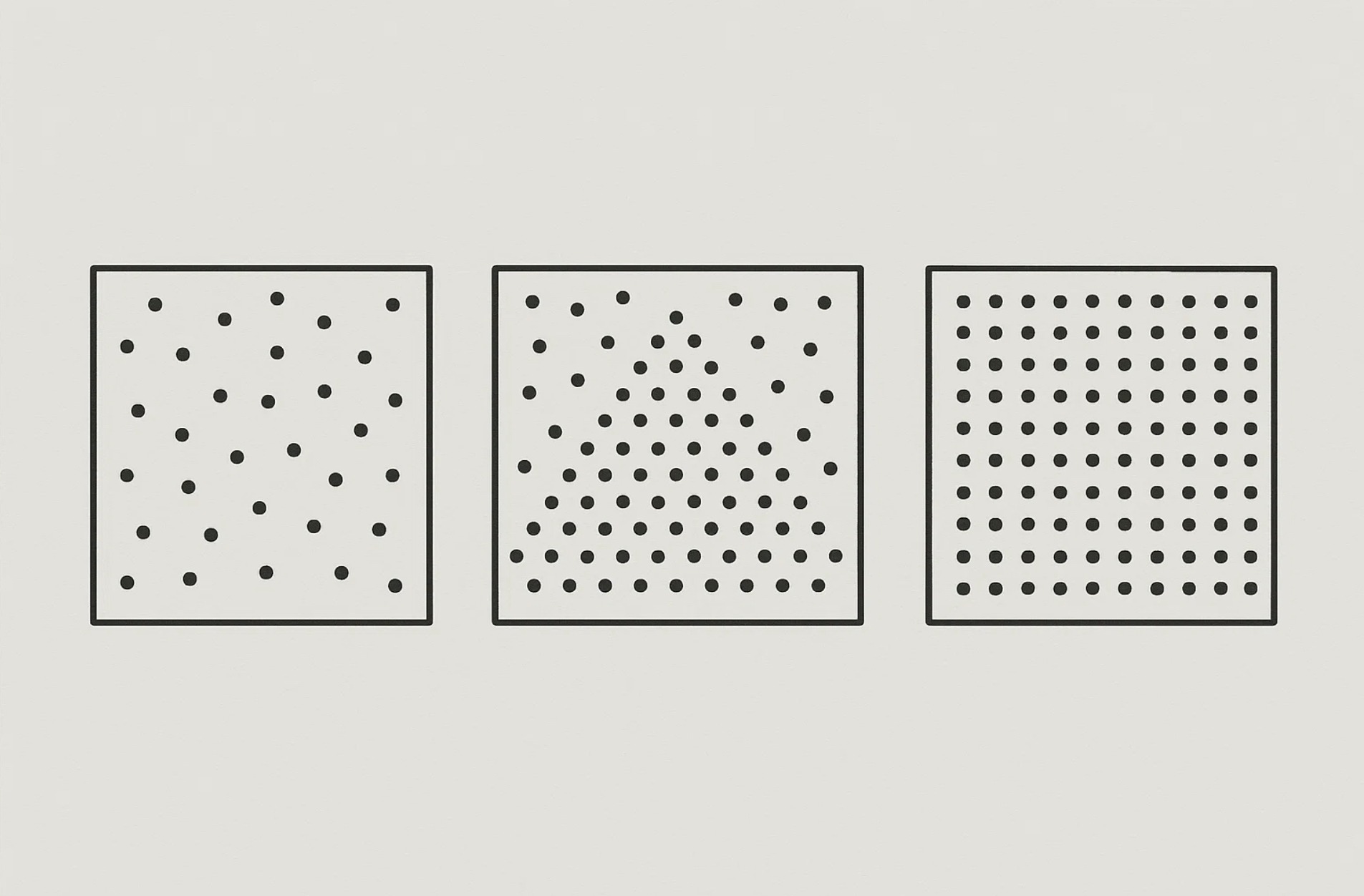

Entropy was first introduced by Rudolf Clausius in the 1860s as part of the Second Law of Thermodynamics: a statement about the irreversible tendency of energy to spread out, or dissipate, in a closed system (Clausius, 1865). Later, Ludwig Boltzmann recast entropy in statistical terms. Rather than a purely macroscopic quantity tied to heat flow, entropy became a measure of how many microstates (the ways atoms can be arranged) correspond to a single macrostate (the observable condition, such as a gas at a given temperature and volume) (Boltzmann, 1877).
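In modern notation, the two definitions can be written compactly. Clausius defined a change in entropy through heat transferred reversibly at temperature T, the Second Law says the entropy of an isolated system never decreases, and Boltzmann's relation ties entropy to the number W of microstates compatible with a macrostate, with k_B the Boltzmann constant. The symbols are standard textbook notation rather than the original authors' own.

```latex
dS = \frac{\delta Q_{\mathrm{rev}}}{T},
\qquad
\Delta S_{\mathrm{isolated}} \ge 0,
\qquad
S = k_B \ln W
```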

This statistical leap gave entropy its arrow-of-time quality. Because disordered macrostates correspond to vastly more microstates than ordered ones, systems left undisturbed tend to move from order to disorder (ice melts, stars burn out, structures decay).
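To see why counting microstates produces a direction, here is a minimal sketch under a toy assumption: N distinguishable particles, each sitting in either the left or right half of a box, with every arrangement equally likely. The macrostate is simply how many particles are on the left. The function names are illustrative, not taken from any library.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact by SI definition)


def microstates(n_total: int, n_left: int) -> int:
    """Number of arrangements W with n_left of n_total particles in the left half."""
    return math.comb(n_total, n_left)


def boltzmann_entropy(w: int) -> float:
    """S = k_B ln W, in joules per kelvin."""
    return K_B * math.log(w)


def macrostate_probability(n_total: int, n_left: int) -> float:
    """Chance of this macrostate if all 2**n_total arrangements are equally likely."""
    return microstates(n_total, n_left) / 2**n_total


N = 100
for n_left in (0, 10, 25, 50):
    w = microstates(N, n_left)
    print(
        f"{n_left:>3} on the left: W = {w:.3e}, "
        f"S = {boltzmann_entropy(w):.3e} J/K, "
        f"P = {macrostate_probability(N, n_left):.3e}"
    )
```

With 100 particles, the all-left arrangement has exactly one microstate (zero entropy), while the even split has roughly 10^29. A system wandering at random among arrangements is therefore overwhelmingly likely to be found near the even split, which is the statistical content of "order to disorder."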

Fast-forward to 1948, when Claude Shannon carried this concept into the world of information. In his seminal paper, Shannon defined entropy as the average unpredictability of a message source, which sets the average number of bits per symbol needed to encode its messages (Shannon, 1948). This made entropy a tool for both thermodynamic and communicative systems. In the 20th and 21st centuries, entropy also took on new metaphorical life.

Ecologists use it to describe ecosystem degradation, economists to talk about systemic collapse, and cultural theorists to explore the unraveling of shared meaning. Entropy is not just heat loss; it is also informational uncertainty, organizational decay, and the energetic cost of stability.
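Shannon's measure is simple enough to compute directly. The sketch below assumes the simplest case: it treats a message as a sequence of independent symbols, estimates each symbol's probability p from its frequency in that message, and returns the average information per symbol in bits, H = sum of p * log2(1/p). The name `shannon_entropy` is illustrative, not a library function.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Average information per symbol, in bits, using symbol frequencies as probabilities."""
    total = len(message)
    if total == 0:
        return 0.0
    counts = Counter(message)
    # H = sum over symbols of p * log2(1/p), with p = count / total
    return sum((c / total) * math.log2(total / c) for c in counts.values())


print(shannon_entropy("AAAA"))  # 0.0: fully predictable
print(shannon_entropy("HTHT"))  # 1.0: like a fair coin, one bit per symbol
print(shannon_entropy("ABCD"))  # 2.0: four equally likely symbols
```

A message that spreads its probability more evenly across more distinct symbols needs more bits per symbol, which is the sense in which entropy measures unpredictability.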

2. Ectropy: The Rise of Order in Open Systems

But life doesn’t always fall apart. Disorder and destruction are frequently the birthing ground for a new order. Trees grow, cultures evolve, and cities build skyscrapers. This is where ectropy enters the frame. Coined in 1910 by the physicist Felix Auerbach, “ectropy” was meant to represent the inverse of entropy: a movement toward order, complexity, and integration (Auerbach, 1910). While entropy dominates closed systems, ectropy characterizes open systems, those that exchange energy and information with their environment. Life, by definition, is ectropic.

This idea gained traction through the work of Ilya Prigogine, who studied dissipative structures: systems that maintain or increase their internal order by exporting entropy to their surroundings (Prigogine & Stengers, 1984). A hurricane, for example, is a local increase in order, even as it dissipates massive amounts of energy and raises the total entropy of its environment. The visionary architect and systems theorist Buckminster Fuller embraced this ectropic vision, framing humanity’s potential as a syntropic, complexity-generating force (Fuller, 1969). In this sense, ectropy is not just a scientific observation but an ethical orientation, a call to create conditions that foster complexity, awareness, and adaptive design.
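Prigogine's point can be stated as an entropy balance for an open system. In his notation, a change in the system's entropy splits into an exchange term d_eS (entropy carried across the boundary) and an internal production term d_iS, which the Second Law requires to be non-negative. It follows that the system's entropy can hold steady or fall only if it exports at least as much entropy as it produces internally:

```latex
dS = d_e S + d_i S, \qquad d_i S \ge 0
\\[4pt]
dS \le 0 \;\Longleftrightarrow\; d_e S \le -\, d_i S
```

Because d_iS is never negative, a dissipative structure that keeps or builds its order must run a net entropy export (d_eS < 0 whenever d_iS > 0), which is what the hurricane does to its surroundings.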

In 2025, Kishore Sital reintroduced ectropy with renewed analytical depth, expanding it beyond thermodynamic and biological frameworks and positioning it as a core dynamic within complex adaptive systems (Sital, 2025). His work emphasizes ectropy as a measurable, directional phenomenon tied to systemic coherence, regenerative feedback loops, and semantic integrity. Where Prigogine focused on local order sustained by energy flows, and Fuller treated ectropy as a visionary design principle, Sital offers an applied model, bridging entropy’s conceptual legacy with the needs of planetary awareness and AI alignment.

3. Negentropy: Feeding on Order

Closely related, but not identical, is the concept of negentropy, short for “negative entropy.” The underlying idea was popularized by physicist Erwin Schrödinger in his 1944 book What is Life?, where he described living beings as systems that “feed on negative entropy” to maintain their structure against the pull of disorder (Schrödinger, 1944).

Unlike ectropy, which implies a system’s output of complexity, negentropy is more about input—the energy or information needed to sustain order. Think of it as the nutritious fuel that keeps a complex system going.

Later thinkers, such as Léon Brillouin and Norbert Wiener, further connected negentropy to information theory, arguing that receiving or processing structured information reduces entropy locally (Brillouin, 1962; Wiener, 1948). While often used interchangeably with ectropy, negentropy is more operational: a resource or condition for maintaining structure rather than a generative force in itself. In many ways, negentropy links entropy to intelligence, because to learn, evolve, and adapt, systems must absorb ordered information and energy.
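The information-theoretic reading can be made concrete in one simple way, which is an assumption here rather than Brillouin's exact formulation: measure a source's negentropy as the gap between the maximum entropy it could have (all n outcomes equally likely, log2 n bits) and the entropy it actually has. Both helper names are hypothetical.

```python
import math


def entropy_bits(probs: list[float]) -> float:
    """Shannon entropy in bits; outcomes with zero probability contribute nothing."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)


def negentropy_bits(probs: list[float]) -> float:
    """Hypothetical measure: maximum possible entropy minus actual entropy."""
    h_max = math.log2(len(probs))  # entropy if all outcomes were equally likely
    return h_max - entropy_bits(probs)


print(negentropy_bits([0.25, 0.25, 0.25, 0.25]))   # 0.0: uniform, no structure
print(negentropy_bits([1.0, 0.0, 0.0, 0.0]))       # 2.0: fully determined
print(negentropy_bits([0.5, 0.25, 0.125, 0.125]))  # 0.25: partly structured
```

Under this measure, a uniform distribution carries no usable order and scores zero, while a fully determined one scores the full log2 n bits. The same gap is closely related to what Shannon called redundancy.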

4. From Physics to Ethics: Implications for Systems and Society

These three concepts—entropy, ectropy, and negentropy—offer a lens for understanding our ecological, political, and informational crises, as well as the pathways through them.

- Entropy warns us: without attention and energy, systems decline. Climate instability, institutional breakdown, and misinformation are signs of rising social entropy.

- Ectropy invites us to design systems that generate order rather than impose it. Education, culture, diplomacy, and cooperation are ectropic when they foster resilience and emergent complexity.

- Negentropy reminds us that inputs matter. A starving mind or a polluted environment cannot maintain structure. Access to energy, knowledge, and meaning is a precondition for sustainable systems.

In governance, these principles offer a framework for ethical design: minimize unnecessary entropy, cultivate ectropic flows, and ensure access to negentropic resources.

Conclusion on Entropy, Ectropy, & Negentropy

In the 21st century, an age of increasing disorder and accelerating complexity, this triad of concepts gives us not only a map of decline but also a compass for renewal. It helps us understand the costs of chaos, the conditions of emergence, and the needs of evolving systems.

To build a more coherent world, we must navigate this dance with care—recognizing that order is never free, and that true design invites diversity, adaptability, and relational intelligence.

This dance extends beyond thermodynamics. It is transdisciplinary: scientific, diplomatic, ecological, emotional, and ethical. It’s a field we’re all already part of.

References

- Auerbach, F. (1910). Ektropismus oder die physikalische Theorie des Lebens [Ectropism, or the Physical Theory of Life]. Wilhelm Engelmann.

- Brillouin, L. (1962). Science and Information Theory (2nd ed.). Academic Press.

- Fuller, B. (1969). Operating Manual for Spaceship Earth. Southern Illinois University Press.

- Prigogine, I., & Stengers, I. (1984). Order out of Chaos: Man’s New Dialogue with Nature. Bantam Books.

- Schrödinger, E. (1944). What is Life? The Physical Aspect of the Living Cell. Cambridge University Press.

- Sital, K. (2025). Ectropy; a Fundamental Organizing Principle within Open Systems ~ Complementary to Entropy. Zenodo. https://doi.org/10.5281/zenodo.15069046

- Wiener, N. (1948). Cybernetics: Or Control and Communication in the Animal and the Machine. MIT Press.
